When I started looking into metrics a few years ago, I didn't fully understand what they meant or how I should use them. But I was so excited that I tried to convince my then-colleagues to come up with things they wanted to measure so that we, as a team, could track that information and get some answers.

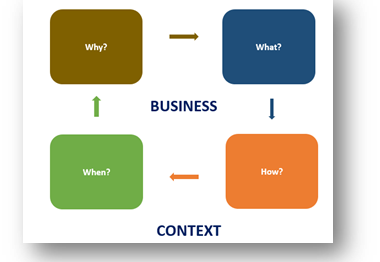

I was so disappointed that nobody saw the value in what I wanted to do that I got mad: Why didn't they want to do it? After all, it would have helped us all, and in my head, I was contributing to the growth of our product. Time passed, and at some point, this situation popped back into my mind. I stopped for a second and realized that I had missed a few steps. Instead of explaining "why" I wanted to do it, I had jumped directly to the "what." The "why" was clear enough for me, but apparently it was not as clear for my colleagues. To answer "what" metrics you need, and whether you need them at all, you must first understand "why." Metrics are not our ultimate goal. We don't have to track metrics just because everybody else is doing it. We have to use them to learn more about our product, our processes, the progress of our development activities, and how we can improve.

We can compare this situation with a medical check-up: The doctor recommends a set of tests, but not before knowing the "why": maybe we have a stomachache, perhaps we've been in a car crash, or maybe it is just a regular check-up. Based on that, the doctor adjusts the "what": what exactly do we need to know? Blood test results, maybe, or cholesterol levels, and so on. Then the "when" and "how" come into play: you need to do the blood test in the morning, and you can't have any food or drinks before it. Continuing the analogy, there is also a context. Before giving us any treatment, the doctor makes sure that we are not allergic to that particular medicine and that we aren't already on a different treatment that would conflict with it. Just as we do for the products we work on, the doctor has a business goal: for the patient to be healthy. It's the same in a software development context: we want software that satisfies our customers' needs and has the desired quality level.

Many professionals in this industry fail to realize that metrics should always help them find out whether, in their context, they are taking the right steps toward a specific business goal. Another essential point is that quality is everybody's responsibility. Even if the title says QA metrics, that does not mean that only the test team should develop and monitor such metrics; the whole team is responsible for them.